Intermittent Oranges (tests which fail sometimes and pass other times) are an ever-increasing problem with test automation at Mozilla.

While there are many common causes for failures (bad tests, the environment/infrastructure we run on, and bugs in the product), we still do not have a clear definition of what we view as intermittent. Some common statements I have heard:

- “It’s obvious, if it failed last year, the test is intermittent”

- “If it failed 3 years ago, I don’t care, but if it failed 2 months ago, the test is intermittent”

- “I fixed the test to not be intermittent, I verified by retriggering the job 20 times on try server”

These imply very different definitions of what is intermittent. A definition will need to:

- determine if we should take action on a test (programmatically or manually)

- define a policy that sheriffs and developers can use to guide work

- guide developers to know when a new/fixed test is ready for production

- provide useful data to release and Firefox product management about the quality of a release

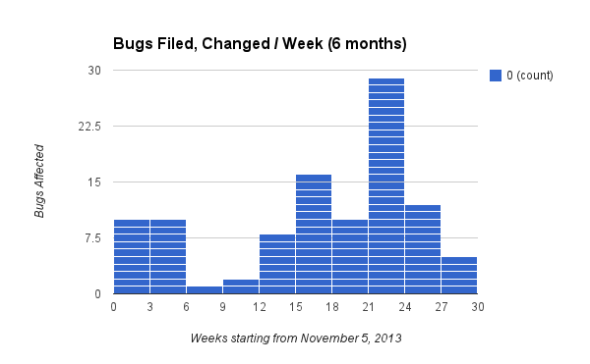

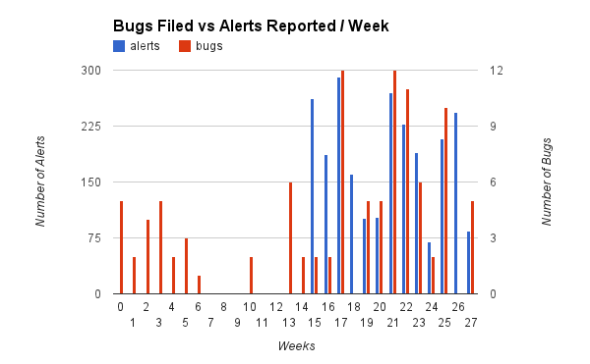

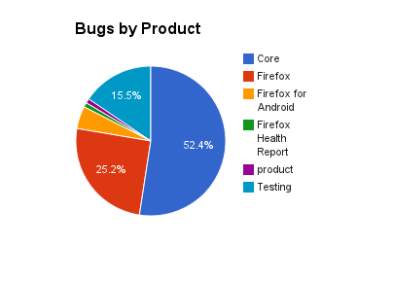

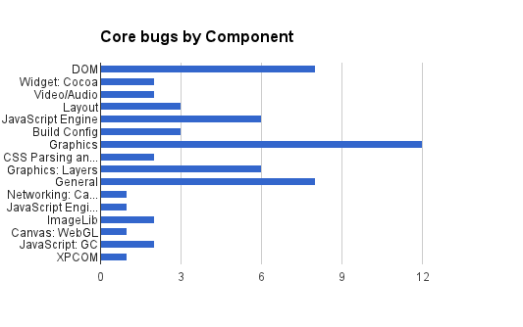

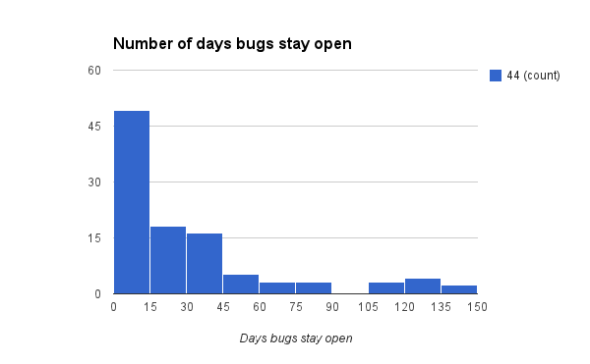

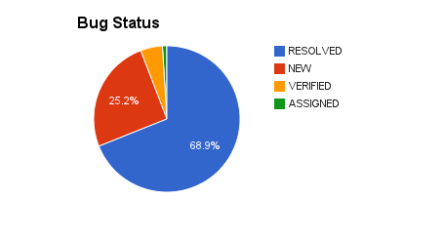

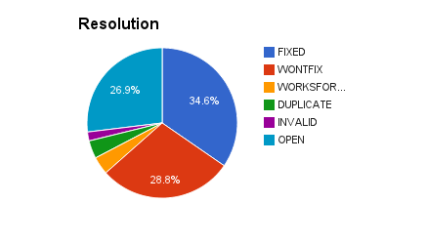

Because I wanted a clear definition of what we are working with, I looked over 6 months (2016-04-01 to 2016-10-01) of OrangeFactor data (7330 bugs, 250,000 failures) to find patterns and trends. I was surprised at how many bugs had <10 instances reported (3310 bugs, 45.2%). Likewise, I was surprised that such a small number of bugs (1236) accounts for >80% of the failures. It made sense to look at things daily, weekly, monthly, and every 6 weeks (our typical release cycle). After much slicing and dicing, I have come up with 4 buckets:

- Random Orange: this test has failed, possibly multiple times in its history, but in a given 6 week window we see <10 failures (45.2% of bugs)

- Low Frequency Orange: this test might fail up to 4 times in a given day, but typically has <=1 failure per day. In a 6 week window we see <60 failures (26.4% of bugs)

- Intermittent Orange: this test fails up to 10 times/day, or <120 times in 6 weeks (11.5% of bugs)

- High Frequency Orange: this test fails >10 times/day on many days and is often seen in try pushes (16.9% of bugs, or 1236 bugs)

Alternatively, we could simplify our definitions and use:

- low priority or not actionable (buckets 1 + 2)

- high priority or actionable (buckets 3 + 4)
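The four buckets and the simplified two-way split can be sketched as a small classifier. This is only an illustration: the function and enum names are hypothetical, it uses the 6 week failure count alone (ignoring the per-day rates), and it assumes High Frequency Orange begins at 120 failures in the window, which is inferred from the upper bound of the Intermittent Orange bucket rather than stated directly.

```python
from enum import Enum


class Bucket(Enum):
    RANDOM = 1
    LOW_FREQUENCY = 2
    INTERMITTENT = 3
    HIGH_FREQUENCY = 4


def classify(failures_in_six_weeks: int) -> Bucket:
    """Map a bug's failure count in a 6 week window to a bucket."""
    if failures_in_six_weeks < 0:
        raise ValueError("failure count cannot be negative")
    if failures_in_six_weeks < 10:
        return Bucket.RANDOM
    if failures_in_six_weeks < 60:
        return Bucket.LOW_FREQUENCY
    if failures_in_six_weeks < 120:
        return Bucket.INTERMITTENT
    # Assumption: anything at or above 120 failures is High Frequency.
    return Bucket.HIGH_FREQUENCY


def is_actionable(bucket: Bucket) -> bool:
    """Simplified view: buckets 3 and 4 are high priority / actionable."""
    return bucket in (Bucket.INTERMITTENT, Bucket.HIGH_FREQUENCY)
```

For example, `classify(75)` returns `Bucket.INTERMITTENT`, which `is_actionable` treats as high priority.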

Does defining these buckets by the number of failures in a given time window help with what we want the definition to solve?

- Determine if we should take action on a test (programmatically or manually):

  - ideally buckets 1/2 can be detected programmatically with autostar and removed from our view, possibly rerunning to validate that it isn’t a new failure.

  - buckets 3/4 have the best chance of reproducing; we can run them in debuggers (like ‘rr’), or triage to the appropriate developer when we have enough information

- Define a policy that sheriffs and developers can use to guide work

  - sheriffs can know when to file bugs (either buckets 2 or 3 as a starting point)

  - developers understand the severity based on the bucket. Ideally we will need a lot of context, but understanding severity is important.

- Guide developers to know when a new/fixed test is ready for production

  - If we fix a test, we want to ensure it is stable before we make it tier-1. A developer can use the math of 300 commits/day to ensure the test passes reliably.

  - NOTE: SETA and coalescing ensure we don’t run every test for every push, so we more likely see 100 test runs/day

- Provide useful data to release and Firefox product management about the quality of a release

  - Release Management can take the OrangeFactor into account

  - new features might be required to have a certain volume of tests at or below Random Orange
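To make the "is my fix stable?" math concrete, here is a rough sketch of how many green runs it takes to trust a fix. It assumes each run fails independently at a constant rate, that a test gets about 100 runs/day (the figure from the note above), and that a 6 week window is 42 days. The helper names are hypothetical, and real failures are rarely this well behaved.

```python
import math

DAYS_IN_WINDOW = 42   # 6 weeks
RUNS_PER_DAY = 100    # assumption: runs per day after SETA and coalescing


def failure_rate(failures_in_window, runs_per_day=RUNS_PER_DAY,
                 days=DAYS_IN_WINDOW):
    """Estimated per-run failure probability for a test."""
    return failures_in_window / (runs_per_day * days)


def chance_all_pass(rate, runs):
    """Probability that a test failing at `rate` passes `runs` times in a row."""
    return (1.0 - rate) ** runs


def runs_needed(rate, confidence=0.95):
    """Consecutive green runs needed so that a test still failing at `rate`
    would have shown at least one failure with probability >= confidence."""
    if not 0.0 < rate < 1.0:
        raise ValueError("rate must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - rate))


# A test at the top of the Intermittent Orange bucket: 120 failures in 6 weeks.
rate = failure_rate(120)
print(f"per-run failure rate: {rate:.4f}")
print(f"chance 20 retriggers all pass anyway: {chance_all_pass(rate, 20):.2f}")
print(f"green runs needed for 95% confidence: {runs_needed(rate)}")
```

Under these assumptions, a test failing 120 times in 6 weeks would still pass 20 retriggers in a row a little more than half the time, and it takes on the order of 100 consecutive green runs to be 95% confident the failure is gone. Rarer failures need far more runs, which is why 20 retriggers on try is weak evidence of a fix.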

One other way to look at this is what gets posted in bugs (by the War on Orange Bugzilla robot). There are simple rules:

- 15+ times/day – post a daily summary (bucket #4)

- 5+ times/week – post a weekly summary (bucket #3/4 – about 40% of bucket 2 will show up here)
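These two rules are simple enough to express as a threshold check. The sketch below is not the robot's actual code: the function name and return values are made up for illustration, and whether the real robot posts both summaries when both thresholds are met is an assumption.

```python
DAILY_THRESHOLD = 15   # failures in one day
WEEKLY_THRESHOLD = 5   # failures in one week


def summaries_to_post(failures_today: int, failures_this_week: int) -> list:
    """Return which summary comments the rules above would trigger for a bug."""
    summaries = []
    if failures_today >= DAILY_THRESHOLD:
        summaries.append("daily")
    if failures_this_week >= WEEKLY_THRESHOLD:
        summaries.append("weekly")
    return summaries
```

A bug with 2 failures today and 7 this week would get only the weekly summary, while a bug with 1 failure today and 3 this week would get no comment at all.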

Lastly, I would like to cover some exceptions and ways some might see this as flawed:

- missing or incorrect data in OrangeFactor (human error)

- some issues have many bugs but a single root cause, so we could miscategorize a fixable issue

I do not believe adjusting a definition will fix the above issues; possibly different tools or methods of running the tests would reduce the concerns there.